| Computing one billion roots using the tangent Graeffe method   |

|

| July 18, 2020 |

|

. This paper is

part of a project that has received funding from the French

“Agence de l'innovation de défense”.

. This paper is

part of a project that has received funding from the French

“Agence de l'innovation de défense”.

. This article has

been written using GNU TeXmacs [28].

. This article has

been written using GNU TeXmacs [28].

Let

We used the tangent Graeffe root finding algorithm from [19,

27] which is a factor of

In this article we discuss the steps of the tangent Graeffe

algorithm, the sub-algorithms that we used, how we parallelized

them, and how we organized the memory so we could factor a

polynomial of degree Polynomial root finding over smooth finite fields is a key ingredient for algorithms for sparse polynomial interpolation that are based on geometric sequences. This application was also one of our main motivations for the present work. |

Consider a polynomial function  over a field

over a field  given through a black box capable of evaluating

given through a black box capable of evaluating  at points in

at points in  .

The problem of sparse interpolation is to recover the

representation of

.

The problem of sparse interpolation is to recover the

representation of  in its usual form, as a linear

combination

in its usual form, as a linear

combination

|

(1) |

of monomials  . One popular

approach to sparse interpolation is to evaluate

. One popular

approach to sparse interpolation is to evaluate  at points in a geometric progression. This approach goes back to work of

Prony in the eighteen's century [41] and became well known

after Ben-Or and Tiwari's seminal paper [2]. It has widely

been used in computer algebra, both in theory [7, 30,

32–35, 39] and in practice

[11, 14, 24, 25, 29, 31]; see [43] for a nice survey.

at points in a geometric progression. This approach goes back to work of

Prony in the eighteen's century [41] and became well known

after Ben-Or and Tiwari's seminal paper [2]. It has widely

been used in computer algebra, both in theory [7, 30,

32–35, 39] and in practice

[11, 14, 24, 25, 29, 31]; see [43] for a nice survey.

More precisely, if a bound  for the number of

terms

for the number of

terms  is known, then we first evaluate

is known, then we first evaluate  at

at  pairwise distinct points

pairwise distinct points  , where

, where  and

and

for all

for all  .

The generating function of the evaluations at

.

The generating function of the evaluations at  satisfies the identity

satisfies the identity

where  and

and  is of degree

is of degree

. The rational function

. The rational function  can be recovered from

can be recovered from  using

fast Padé approximation [6, 37]. For

well chosen points

using

fast Padé approximation [6, 37]. For

well chosen points  , it is

often possible to recover the exponents

, it is

often possible to recover the exponents  from the

values

from the

values  . If the exponents

. If the exponents

are known, then the coefficients

are known, then the coefficients  can also be recovered using a transposed version of fast

multipoint interpolation [5, 7]. This leaves

us with the question how to compute the roots

can also be recovered using a transposed version of fast

multipoint interpolation [5, 7]. This leaves

us with the question how to compute the roots  of

of

in an efficient way.

in an efficient way.

For practical applications in computer algebra, we usually have  , in which case it is most

efficient to use a multi-modular strategy. This means that we rather

work with coefficients in a finite field

, in which case it is most

efficient to use a multi-modular strategy. This means that we rather

work with coefficients in a finite field  ,

where

,

where  is a prime number that we are free to

choose. It is well known that polynomial arithmetic over

is a prime number that we are free to

choose. It is well known that polynomial arithmetic over  can be implemented most efficiently using FFTs when the

order

can be implemented most efficiently using FFTs when the

order  of the multiplicative group is smooth. In

practice, this prompts us to choose

of the multiplicative group is smooth. In

practice, this prompts us to choose  of the form

of the form

for some small

for some small  and such

that

and such

that  fits into a machine word.

fits into a machine word.

The traditional way to compute roots of polynomials over finite fields

is using Cantor and Zassenhaus' method [8]. In [19,

20], alternative algorithms were proposed for our case of

interest when  is smooth. The fastest algorithm

was based on the tangent Graeffe transform and it gains a

factor

is smooth. The fastest algorithm

was based on the tangent Graeffe transform and it gains a

factor  with respect to Cantor–Zassenhaus'

method. The aim of the present paper is to report on a parallel

implementation of this new algorithm and on a few improvements that

allow for a further constant speed-up. A preliminary version of this

paper was published at ICMS 2020 [27]. The new

contributions in this paper include the theoretical contributions in

sections 3.4, 3.5, 3.6 and 3.7, the implementation details in section 4 and

the (parallel) timing results in section 5.

with respect to Cantor–Zassenhaus'

method. The aim of the present paper is to report on a parallel

implementation of this new algorithm and on a few improvements that

allow for a further constant speed-up. A preliminary version of this

paper was published at ICMS 2020 [27]. The new

contributions in this paper include the theoretical contributions in

sections 3.4, 3.5, 3.6 and 3.7, the implementation details in section 4 and

the (parallel) timing results in section 5.

In section 2, we start by recalling generalities about the Graeffe transform and the heuristic root finding method based on the tangent Graeffe transform from [19]. In section 3, we present the main new theoretical improvements, which all rely on optimizations in the FFT-model for fast polynomial arithmetic. Our contributions are threefold:

In the FFT-model, one backward transform out of four can be saved for Graeffe transforms of order two (see section 3.2).

When composing a large number of Graeffe transforms of order two,

“FFT caching” [3] can be used to gain

another factor of  (see section 3.3).

(see section 3.3).

All optimizations still apply in the TFT model, which can save a factor between one and two, depending on the number of roots (see section 3.5).

In section 3.4 we also indicate how to generalize our

methods to Graeffe transforms of general orders. In section 3.7

we determine how much faster the tangent Graeffe algorithm is than the

Cantor–Zassenhaus algorithm. To do this, we determine the constant

factors in the complexities of both algorithms, under the assumption

that arithmetic in  is done using FFT-based

algorithms.

is done using FFT-based

algorithms.

We first implemented a sequential version of the tangent Graeffe method

in C, with the optimizations from sections 3.2 and 3.3;

see [27]. Section 4 is devoted to a more

elaborate parallel implementation in Cilk C. We detail how we

parallelized the different steps of the algorithm, and how we organized

the memory so we could factor a polynomial of degree  over

over  , for

, for  . We believe we are the first to factor such

large polynomials. Our code is available here

. We believe we are the first to factor such

large polynomials. Our code is available here

In the last section 5, we give timings. We used a

multi-core computer with two 10 core Intel Xeon E5 2680 v2 CPUs and 128

gigabytes of RAM. The above factorization of a polynomial of degree  then takes just under 4,000 seconds on 10 cores and

uses 121 gigabytes of RAM.

then takes just under 4,000 seconds on 10 cores and

uses 121 gigabytes of RAM.

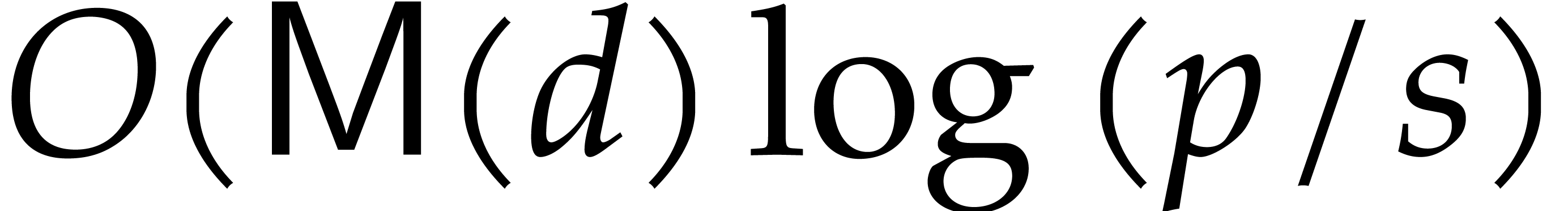

Let  be an effective field. Throughout the paper,

time complexities count arithmetic operations in the field

be an effective field. Throughout the paper,

time complexities count arithmetic operations in the field  and space complexities are for elements of

and space complexities are for elements of  . We use

. We use  to denote the

time for multiplying two polynomials in

to denote the

time for multiplying two polynomials in  of

degree

of

degree  . We make the

customary assumption that

. We make the

customary assumption that  is a non-decreasing

function that tends to infinity.

is a non-decreasing

function that tends to infinity.

We will chiefly be interested in the case when  , where

, where  is a prime of the

form

is a prime of the

form  with

with  small. We call

primes of this form smooth Fourier primes. Some examples that

we use and their bit lengths are as follows:

small. We call

primes of this form smooth Fourier primes. Some examples that

we use and their bit lengths are as follows:

For  and

and  of this form and

of this form and

, we have

, we have  , by using FFT-multiplication.

, by using FFT-multiplication.

Let  be a polynomial of degree

be a polynomial of degree  in

in  which has

which has  distinct

roots in

distinct

roots in  . The tangent

Graeffe algorithm computes the roots of

. The tangent

Graeffe algorithm computes the roots of  .

The cost of the algorithm depends on a parameter

.

The cost of the algorithm depends on a parameter  with

with  . The parameter

. The parameter  determines what proportion of the roots are found in

each iteration of the algorithm. The space complexity of the algorithm

is

determines what proportion of the roots are found in

each iteration of the algorithm. The space complexity of the algorithm

is  and the average time complexity is

and the average time complexity is

Theoretically, choosing  yields the best time

complexity. However, because we want to factor polynomials with very

large

yields the best time

complexity. However, because we want to factor polynomials with very

large  , our implementation

chooses a smaller

, our implementation

chooses a smaller  in the interval

in the interval  to save space. For

to save space. For  the time

complexity is

the time

complexity is

In this section, we recall the tangent Graeffe algorithm from [19]. In the next section, we will analyze its complexity in the FFT-model and present several improvements.

Let  be a general field. The traditional

Graeffe transform of a monic polynomial

be a general field. The traditional

Graeffe transform of a monic polynomial  of degree

of degree  is the unique polynomial

is the unique polynomial  of degree

of degree  such that

such that

|

(2) |

If  is monic, then so is

is monic, then so is  . If

. If  splits over

splits over  into linear factors

into linear factors  ,

then one has

,

then one has

More generally, given  , we

define the Graeffe transform of order

, we

define the Graeffe transform of order  to be the polynomial

to be the polynomial  of degree

of degree  given by

given by

If  , then

, then

If  is a primitive

is a primitive  -th

root of unity in

-th

root of unity in  , then we

also have

, then we

also have

|

(3) |

If  , then we finally have

, then we finally have

|

(4) |

Let  be a formal indeterminate with

be a formal indeterminate with  . Elements in

. Elements in  are

called tangent numbers. They are of the form

are

called tangent numbers. They are of the form  with

with  and basic arithmetic operations go as

follows:

and basic arithmetic operations go as

follows:

Now let  be a monic polynomial of degree

be a monic polynomial of degree  that splits over

that splits over  :

:

where  are pairwise distinct. Then the

tangent deformation

are pairwise distinct. Then the

tangent deformation  satisfies

satisfies

The definitions from the previous subsection readily extend to

coefficients in  instead of

instead of  . Given

. Given  ,

we call

,

we call  the tangent Graeffe transform

of

the tangent Graeffe transform

of  of order

of order  .

We have

.

We have

where

Now assume that we have an efficient way to determine the roots  of

of  . For

some polynomial

. For

some polynomial  , we may

decompose

, we may

decompose

For any root  of

of  ,

we then have

,

we then have

Whenever  happens to be a single root of

happens to be a single root of  , it follows that

, it follows that

If  , this finally allows us

to recover

, this finally allows us

to recover  as

as

Assume now that  is a finite field, where

is a finite field, where  is a prime number of the form

is a prime number of the form  for some small

for some small  . Assume also

that

. Assume also

that  is a primitive element of order

is a primitive element of order  for the multiplicative group of

for the multiplicative group of  .

.

Let  be as in the previous subsection. The

tangent Graeffe method can be used to efficiently compute those

be as in the previous subsection. The

tangent Graeffe method can be used to efficiently compute those  of

of  for which

for which  is a single root of

is a single root of  . In

order to guarantee that there are a sufficient number of such roots, we

first replace

. In

order to guarantee that there are a sufficient number of such roots, we

first replace  by

by  for a

random shift

for a

random shift  , and use the

following heuristic:

, and use the

following heuristic:

For any subset  of cardinality

of cardinality  and any

and any  ,

there exist at least

,

there exist at least  elements

elements  such that

such that  contains at least

contains at least

elements.

elements.

For a random shift  and any

and any  , the assumption ensures with probability at

least

, the assumption ensures with probability at

least  that

that  has at least

has at least

single roots.

single roots.

Now take  to be the largest power of two such

that

to be the largest power of two such

that  and let

and let  .

By construction, note that

.

By construction, note that  .

The roots

.

The roots  of

of  are all

are all

-th roots of unity in the set

-th roots of unity in the set

, where

, where  . We may thus determine them by evaluating

. We may thus determine them by evaluating  at

at  for

for  . Since

. Since  ,

this can be done efficiently using a discrete Fourier transform.

Combined with the tangent Graeffe method from the previous subsection,

this leads to the following probabilistic algorithm for root finding:

,

this can be done efficiently using a discrete Fourier transform.

Combined with the tangent Graeffe method from the previous subsection,

this leads to the following probabilistic algorithm for root finding:

Algorithm

|

||||||

Remark  we may use

we may use  ,

which requires three polynomial multiplications in

,

which requires three polynomial multiplications in  of degree

of degree  . In total, step 5

therefore performs

. In total, step 5

therefore performs  such multiplications. We

discuss how to perform step 5 efficiently in the FFT model

in section 3.

such multiplications. We

discuss how to perform step 5 efficiently in the FFT model

in section 3.

Remark  for

for

and the resulting threshold

and the resulting threshold  for

for  . For larger values of

. For larger values of

, the computations of the

DFTs in step 6 get more expensive, but the proportion of

single roots goes up, so more roots are determined at each iteration.

From an asymptotic complexity perspective, it would be best to take

, the computations of the

DFTs in step 6 get more expensive, but the proportion of

single roots goes up, so more roots are determined at each iteration.

From an asymptotic complexity perspective, it would be best to take

. In practice, we actually

preferred to take the lower threshold

. In practice, we actually

preferred to take the lower threshold  , because the constant factor of our implementation

of step 6 (based on Bluestein's algorithm [4])

is significant with respect to our highly optimized implementation of

the tangent Graeffe method. A second reason we prefer

, because the constant factor of our implementation

of step 6 (based on Bluestein's algorithm [4])

is significant with respect to our highly optimized implementation of

the tangent Graeffe method. A second reason we prefer  of size

of size  instead of

instead of  is

that the total space used by the algorithm is linear in

is

that the total space used by the algorithm is linear in  .

.

Remark  terms, the idea is to take

terms, the idea is to take  and

and  of order

of order  instead of

instead of  for

some constant

for

some constant  with

with  .

This reduces

.

This reduces  (and the cost of the top-level

iteration) by a factor of

(and the cost of the top-level

iteration) by a factor of  .

For the recursive calls, we still need to work with a primitive root of

unity

.

For the recursive calls, we still need to work with a primitive root of

unity  of order

of order  and

random shifts.

and

random shifts.

Assume that  is invertible in

is invertible in  and let

and let  be a primitive

be a primitive  -th root of unity. Consider a polynomial

-th root of unity. Consider a polynomial  . Then the discrete Fourier

transform (DFT) of order

. Then the discrete Fourier

transform (DFT) of order  of the sequence

of the sequence  is defined by

is defined by

We will write  for the cost of one discrete

Fourier transform in terms of the number of operations in

for the cost of one discrete

Fourier transform in terms of the number of operations in  . We assume that

. We assume that  .

For any

.

For any  , we have

, we have

|

(5) |

If  is invertible in

is invertible in  , then it follows that

, then it follows that  .

The costs of direct and inverse transforms therefore coincide up to a

factor

.

The costs of direct and inverse transforms therefore coincide up to a

factor  .

.

If  is composite,

is composite,  ,

and

,

and  , then we have

, then we have

This shows that a DFT of length  reduces to

reduces to  transforms of length

transforms of length  plus

plus

transforms of length

transforms of length  plus

plus  multiplications in

multiplications in  :

:

In particular, if  , then

, then

.

.

It is sometimes convenient to apply DFTs directly to polynomials as

well; for this reason, we also define  .

Given two polynomials

.

Given two polynomials  with

with  , we may then compute the product

, we may then compute the product  using

using

In particular, we obtain  ,

where we recall that

,

where we recall that  stands for the cost of

multiplying two polynomials of degree

stands for the cost of

multiplying two polynomials of degree  .

.

Remark  .

Since

.

Since  is a power of two, this length is of the

form

is a power of two, this length is of the

form  for some

for some  .

In view of (6), we may therefore reduce step 6

to

.

In view of (6), we may therefore reduce step 6

to  DFTs of length

DFTs of length  plus

plus

DFTs of length

DFTs of length  .

If

.

If  is very small, then we may use a naive

implementation for DFTs of length

is very small, then we may use a naive

implementation for DFTs of length  .

In general, one may use Bluestein's algorithm [4] to reduce

the computation of a DFT of length

.

In general, one may use Bluestein's algorithm [4] to reduce

the computation of a DFT of length  into the

computation of a product in

into the

computation of a product in  ,

which can in turn be computed using FFT-multiplication and three DFTs of

length a larger power of two.

,

which can in turn be computed using FFT-multiplication and three DFTs of

length a larger power of two.

Let  be a field with a primitive

be a field with a primitive  -th root of unity

-th root of unity  .

Let

.

Let  be a polynomial of degree

be a polynomial of degree  . Then the relation (2) yields

. Then the relation (2) yields

|

(7) |

For any  , we further note

that

, we further note

that

|

(8) |

so  can be obtained from

can be obtained from  using

using  transpositions of elements in

transpositions of elements in  . Concerning the inverse transform, we also

note that

. Concerning the inverse transform, we also

note that

for  . Plugging this into (7), we conclude that

. Plugging this into (7), we conclude that

This leads to the following algorithm for the computation of  :

:

be a primitive

be a primitive  -th root of unity in

-th root of unity in  and assume that

and assume that  is invertible in

is invertible in  . Given a monic polynomial

. Given a monic polynomial  with

with  , we can compute

, we can compute  in time

in time

Proof. We have already explained the correctness

of Algorithm 2. Step 1 requires one forward DFT of length

and cost

and cost  .

Step 2 can be done in linear time

.

Step 2 can be done in linear time  .

Step 3 requires one inverse DFT of length

.

Step 3 requires one inverse DFT of length  and

cost

and

cost  . The total cost of

Algorithm 2 is therefore

. The total cost of

Algorithm 2 is therefore  .

.

Remark  This

gives a

This

gives a  improvement over the previously best

known bound

improvement over the previously best

known bound  that was used in [19].

Note that the best known algorithm for computing squares of polynomials

of degree

that was used in [19].

Note that the best known algorithm for computing squares of polynomials

of degree  is

is  .

It would be interesting to know whether squares can also be computed in

time

.

It would be interesting to know whether squares can also be computed in

time  .

.

In view of (4), Graeffe transforms of power of two orders

can be computed using

can be computed using

|

(9) |

Now assume that we computed the first Graeffe transform  using Algorithm 2 and that we wish to apply a second

Graeffe transform to the result. Then we note that

using Algorithm 2 and that we wish to apply a second

Graeffe transform to the result. Then we note that

|

(10) |

is already known for  . We can

use this to accelerate step 1 of the second application of Algorithm 2. Indeed, in view of (6) for

. We can

use this to accelerate step 1 of the second application of Algorithm 2. Indeed, in view of (6) for  and

and  , we have

, we have

|

(11) |

for  . In order to exploit

this idea in a recursive fashion, it is useful to modify Algorithm 2 so as to include

. In order to exploit

this idea in a recursive fashion, it is useful to modify Algorithm 2 so as to include  in the input and

in the input and

in the output. This leads to the following

algorithm:

in the output. This leads to the following

algorithm:

Algorithm

|

||||||

|

be a primitive

be a primitive  -th root of unity in

-th root of unity in  and assume that

and assume that  is invertible in

is invertible in

. Given a monic polynomial

. Given a monic polynomial

with

with  and

and  , we can compute

, we can compute  in

time

in

time

Proof. It suffices to compute  and then to apply Algorithm 3 recursively,

and then to apply Algorithm 3 recursively,  times. Every application of Algorithm 3 now takes

times. Every application of Algorithm 3 now takes  operations in

operations in  ,

whence the claimed complexity bound.

,

whence the claimed complexity bound.

Remark  were directly

computed using the formula (9), using

were directly

computed using the formula (9), using  operations in

operations in  . The new

algorithm is twice as fast for large

. The new

algorithm is twice as fast for large  .

.

The algorithms from subsections 3.2 and 3.3

readily generalize to Graeffe transforms of order  for arbitrary

for arbitrary  , provided that

we have an

, provided that

we have an  -th root of unity

-th root of unity

. For convenience of the

reader, we specified the generalization of Algorithm 3

below, together with the resulting complexity bounds.

. For convenience of the

reader, we specified the generalization of Algorithm 3

below, together with the resulting complexity bounds.

Algorithm

|

||||||

|

be a primitive

be a primitive  -th root of unity in

-th root of unity in  , where

, where  is invertible in

is invertible in

. Given a monic polynomial

. Given a monic polynomial

with

with  and

and  , we can compute

, we can compute  in

time

in

time

Proof. Straightforward generalization of

Proposition 7.

be a primitive

be a primitive  -th root of unity in

-th root of unity in  , where

, where  are invertible in

are invertible in

. Given a monic polynomial

. Given a monic polynomial

with

with  and

and  , we can compute

, we can compute  in

time

in

time

Proof. Direct consequence of (4).

Remark  . In terms of the size

. In terms of the size  of

of

, it is instructive to

observe that the “average cost”

, it is instructive to

observe that the “average cost”

is minimal for  . This

suggests that it might be interesting to use Graeffe transforms of order

three whenever possible. In the application of Algorithm 1,

this would lead us to take primes of the form

. This

suggests that it might be interesting to use Graeffe transforms of order

three whenever possible. In the application of Algorithm 1,

this would lead us to take primes of the form  , with

, with  small and

small and  close to

close to  . This

still allows us to use radix 2 FFTs, while at the same time benefitting

from radix 3 Graeffe transforms.

. This

still allows us to use radix 2 FFTs, while at the same time benefitting

from radix 3 Graeffe transforms.

If  is a fixed finite field, then DFTs are most

efficient for sizes

is a fixed finite field, then DFTs are most

efficient for sizes  that divide

that divide  . For our root finding application, it is often

convenient to take

. For our root finding application, it is often

convenient to take  , in which

case

, in which

case  should be a power of two or three times a

power of two. The truncated Fourier transform was developed for the

multiplication of polynomials such that the degree of the product does

not have a nice size

should be a power of two or three times a

power of two. The truncated Fourier transform was developed for the

multiplication of polynomials such that the degree of the product does

not have a nice size  of this type. It turns out

that we may also use it for the efficient computation of Graeffe

transforms of polynomials of arbitrary degrees. Moreover, the

optimizations from the previous subsections still apply.

of this type. It turns out

that we may also use it for the efficient computation of Graeffe

transforms of polynomials of arbitrary degrees. Moreover, the

optimizations from the previous subsections still apply.

Let us briefly describe how the truncated Fourier transform can be used

for the computation of Graeffe transforms of power of two orders. With

the notations from subsections 3.2 and 3.3, we

assume that  is a power of two as well and that

we wish to compute the Graeffe transform of a polynomial

is a power of two as well and that

we wish to compute the Graeffe transform of a polynomial  of degree

of degree  with

with  . Let

. Let  denote the

reversal of a binary number

denote the

reversal of a binary number  of

of  bits. For instance,

bits. For instance,  and

and  . Then the truncated Fourier of

. Then the truncated Fourier of  at order

at order  is defined by

is defined by

It has been shown in [22] that  and

and

can both be computed in time

can both be computed in time  . More generally, for direct transforms, one

may compute

. More generally, for direct transforms, one

may compute

in time  , whenever

, whenever  . For generalizations to arbitrary

radices, we refer to [36].

. For generalizations to arbitrary

radices, we refer to [36].

Taking  , we note that

, we note that

for  . This provides us with

the required counterpart of (8) for retrieving

. This provides us with

the required counterpart of (8) for retrieving  efficiently from

efficiently from  .

The relation (10) also has a natural counterpart:

.

The relation (10) also has a natural counterpart:

for  . This leads to the

following refinement of Algorithm 3:

. This leads to the

following refinement of Algorithm 3:

Algorithm

|

||||||

|

be a primitive

be a primitive  -th root of unity in

-th root of unity in  , where

, where  ,

and assume that

,

and assume that  is invertible in

is invertible in  . Given a monic polynomial

. Given a monic polynomial  with

with  and

and  ,

we can compute

,

we can compute  in time

in time

Proof. Straightforward adaptation of the proof

of Proposition 7, while using [22].

In step 3 of Algorithm 1, we still need an

algorithm to compute the Taylor shift  .

If the characteristic of

.

If the characteristic of  exceeds

exceeds  , then it is (not so) well known [1,

Lemma 3] that this can be done in time

, then it is (not so) well known [1,

Lemma 3] that this can be done in time  ,

using the following reduction to a single polynomial multiplication of

degree

,

using the following reduction to a single polynomial multiplication of

degree  :

:

It is interesting to observe that Taylor shifts can still be computed in

time  in small characteristic, as follows:

in small characteristic, as follows:

We give a theoretical comparison of Algorithm 1 with the

Cantor–Zassenhaus algorithm [8], where both

algorithms have been optimized in the “FFT model” [44].

For this comparison, it is convenient to replace the “worst

case” heuristic H by a more empirical assumption.

More precisely, if we take  for

for  , then the expected proportion of single roots

is

, then the expected proportion of single roots

is  (see e.g. [23]).

This expected proportion is indeed observed in practice: see Table 3. In Algorithm 1, we took

(see e.g. [23]).

This expected proportion is indeed observed in practice: see Table 3. In Algorithm 1, we took  .

.

instead of

instead of  for some fixed

for some fixed  .

Then the expected cost of Algorithm 1 is bounded by

.

Then the expected cost of Algorithm 1 is bounded by

|

(12) |

Proof. We first analyze the cost of step 9. Let  be the cost of the polynomial

multiplications in the product tree algorithm, where

be the cost of the polynomial

multiplications in the product tree algorithm, where  is the size of

is the size of  . Exercise

10.3 of [17] shows that

. Exercise

10.3 of [17] shows that  .

The recurrence for

.

The recurrence for  is

is  for

for  and

and  .

Solving this recurrence while using the assumption that

.

Solving this recurrence while using the assumption that  is non-decreasing yields

is non-decreasing yields  .

Now let

.

Now let  be the successive sizes of

be the successive sizes of  for the recursive calls of the algorithm, so that

for the recursive calls of the algorithm, so that  . Thus the cost of all executions

of step 9 is

. Thus the cost of all executions

of step 9 is  .

Using again that

.

Using again that  is non-decreasing, we have

is non-decreasing, we have

.

.

Let us next analyze the cost of the other steps until step 10

inclusive, but without the recursive calls in step 11. Set

.

.

Step 4 takes  to compute

to compute  .

.

Altogether, the cost of these steps is  .

.

We now need to account for the cost of the recursive calls in step 11. For  the tangent Graeffe algorithm

will, under our hypothesis, on average, obtain at least

the tangent Graeffe algorithm

will, under our hypothesis, on average, obtain at least  of the roots in each recursive call, leaving slightly less than

of the roots in each recursive call, leaving slightly less than  of them to be found. Since

of them to be found. Since  and

and  is non-decreasing, the total cost of

Algorithm 1 is therefore as claimed.

is non-decreasing, the total cost of

Algorithm 1 is therefore as claimed.

Remark  ,

the bound (12) reduces to

,

the bound (12) reduces to  .

Since we may pick

.

Since we may pick  as large as we want, the

complexity of Algorithm 1 is then bounded by

as large as we want, the

complexity of Algorithm 1 is then bounded by  for any

for any  .

.

Assume from now on that we are in the asymptotic region where  . Then Remark 14 shows

that the cost of the tangent Graeffe method is

. Then Remark 14 shows

that the cost of the tangent Graeffe method is  for any

for any  . The bottleneck of

Cantor–Zassenhaus' algorithm in this region is modular

exponentiation, which accounts for a total cost of

. The bottleneck of

Cantor–Zassenhaus' algorithm in this region is modular

exponentiation, which accounts for a total cost of  [17]. We wish to determine the constant factor in order to

give an accurate comparison between the two algorithms. Given

polynomials

[17]. We wish to determine the constant factor in order to

give an accurate comparison between the two algorithms. Given

polynomials  with

with  ,

one modular exponentiation does

,

one modular exponentiation does  modular

squarings

modular

squarings

in a loop. However, in the first few iterations, the degree of  is less then

is less then  so no division is

needed. The number of steps with divisions is

so no division is

needed. The number of steps with divisions is  . Using fast division [17], the

remainder

. Using fast division [17], the

remainder  can be computed with two

multiplications of size

can be computed with two

multiplications of size  assuming the required

inverse of

assuming the required

inverse of  , the reciprocal

of

, the reciprocal

of  , is precomputed. In the

FFT model, one forward transform of

, is precomputed. In the

FFT model, one forward transform of  may be saved

and the forward transform of the inverse of

may be saved

and the forward transform of the inverse of  may

be cached [44]. This costs

may

be cached [44]. This costs  ,

i.e. at least

,

i.e. at least  ,

since

,

since  . The cost of the

top-level modular exponentiation is therefore equivalent to

. The cost of the

top-level modular exponentiation is therefore equivalent to  . Using a similar recursion as for

. Using a similar recursion as for  in the proof of Proposition 13, the total

cost of all modular compositions in CZ is equivalent to

in the proof of Proposition 13, the total

cost of all modular compositions in CZ is equivalent to  (strictly speaking, this is really an upper bound; but we indeed obtain

an equivalence for common values of

(strictly speaking, this is really an upper bound; but we indeed obtain

an equivalence for common values of  ,

such as

,

such as  or

or  ).

Altogether, this means that the tangent Graeffe algorithm is about

).

Altogether, this means that the tangent Graeffe algorithm is about  times faster than Cantor–Zassenhaus.

times faster than Cantor–Zassenhaus.

Remark  , and the

complexity of Cantor–Zassenhaus also involves another

, and the

complexity of Cantor–Zassenhaus also involves another  term due to gcd computations. The corresponding constant

factor is fairly high, which makes the tangent Graeffe algorithm even

more favorable in this asymptotic region.

term due to gcd computations. The corresponding constant

factor is fairly high, which makes the tangent Graeffe algorithm even

more favorable in this asymptotic region.

For our implementation, we have been pursuing three goals. We first

wanted to reimplement the tangent Graeffe algorithm from scratch using

FFT-based polynomial arithmetic and compare it with a good

implementation of the Cantor–Zassenhaus algorithm. Second, we

wanted to factor a polynomial of degree  .

For this, it is important to consider space efficiency. Third, we wanted

to parallelize the tangent Graeffe algorithm for a multi-core computer.

Unlike the Cantor–Zassenhaus algorithm, the tangent Graeffe

algorithm is gcd-free which makes it easier to parallelize. We first

tried parallelizing only the FFTs that appear in steps 3,

5, 6, 9, and 10 of

Algorithm 1 to see if this alone is sufficient to obtain

good parallel speedup. It isn't.

.

For this, it is important to consider space efficiency. Third, we wanted

to parallelize the tangent Graeffe algorithm for a multi-core computer.

Unlike the Cantor–Zassenhaus algorithm, the tangent Graeffe

algorithm is gcd-free which makes it easier to parallelize. We first

tried parallelizing only the FFTs that appear in steps 3,

5, 6, 9, and 10 of

Algorithm 1 to see if this alone is sufficient to obtain

good parallel speedup. It isn't.

Our main practical challenge is, however, space, not time. For a 64 bit

prime  , and an input

polynomial of degree

, and an input

polynomial of degree  , the

input polynomial and output roots alone need 16 gigabytes of storage.

Our first implementation exceeded the virtual memory of our machine

which has 128 gigabytes of RAM plus 240 gigabytes of SSD swap space.

, the

input polynomial and output roots alone need 16 gigabytes of storage.

Our first implementation exceeded the virtual memory of our machine

which has 128 gigabytes of RAM plus 240 gigabytes of SSD swap space.

To reduce space we use in-place algorithms. In-place algorithms do not allocate new space (memory). They work in the input and output space and we allow them to be given additional working space. To parallelize a recursive in-place algorithm, we must partition the input, output and the temporary memory (if any) for recursive parallel calls. Our design is such that the space used does not increase with more cores. We refer the reader to Giorgi, Grene and Roche [18] for examples of in-place serial algorithms in computer algebra and a bibliography.

Number theoretic FFTs over  were first introduced

by Pollard [40]. Let us first explain how we parallelized

such FFTs using Cilk C. The strategy is classical, but it is convenient

to detail it here, since we use the same strategy for parallelizing the

other steps in Algorithm 1. Cilk [16] was

designed to support the parallelization of recursive divide and conquer

algorithms like the FFT for multi-core computers. To parallelize

recursive algorithms using Cilk we first modify the code so that the

memory locations that are updated in the recursive calls do not overlap.

were first introduced

by Pollard [40]. Let us first explain how we parallelized

such FFTs using Cilk C. The strategy is classical, but it is convenient

to detail it here, since we use the same strategy for parallelizing the

other steps in Algorithm 1. Cilk [16] was

designed to support the parallelization of recursive divide and conquer

algorithms like the FFT for multi-core computers. To parallelize

recursive algorithms using Cilk we first modify the code so that the

memory locations that are updated in the recursive calls do not overlap.

Figure 1 shows our sequential C code for two radix 2 FFTs that we use. In the vernacular fft1 is known as the “decimation-in-time” FFT and fft2 as the “decimation-in-frequency” FFT. We refer the reader to Chu and George [9] for a discussion of the two FFTs. We note that Geddes, Czapor, and Labahn present only fft1 in their book on computer algebra [10], whereas von zur Gathen and Gerhard present only fft2 [17].

In the code in Figure 1, LONG is a macro

for long long int, a 64 bit signed integer. The C

functions addmod, submod, mulmod

implement  in

in  respectively for

respectively for  . For

multiplication in

. For

multiplication in  we use Roman Pearce's

implementation of Möller and Granlund [38]. The input

array

we use Roman Pearce's

implementation of Möller and Granlund [38]. The input

array  of size

of size  is the

main input to and output from the FFT. The input

is the

main input to and output from the FFT. The input  is an array of size

is an array of size  containing powers of

containing powers of  , a primitive

, a primitive  -th root of unity in

-th root of unity in  . We precompute

. We precompute

Precomputing

saves asymptotically half of the multiplications in the FFT. For

saves asymptotically half of the multiplications in the FFT. For

,

fft1

and

fft2

both do

,

fft1

and

fft2

both do

multiplications. Duplicating of powers of

multiplications. Duplicating of powers of

means all recursive calls in

fft1

and

fft2

access

means all recursive calls in

fft1

and

fft2

access

sequentially.

sequentially.

|

||||||||||||||||||||||||||||||||||||||||||

Codes like these where the recursive calls update separate parts of the

array

are easy to parallelize using Cilk. To parallelize

fft1

in Cilk C we first make a new subroutine

fft1cilk

which executes the two recursive calls in parallel. In Figure

2

the two Cilk

spawn

directives do this. For small

are easy to parallelize using Cilk. To parallelize

fft1

in Cilk C we first make a new subroutine

fft1cilk

which executes the two recursive calls in parallel. In Figure

2

the two Cilk

spawn

directives do this. For small

we do not want to start two new processes because of the cost of

process management overhead. Thus for

we do not want to start two new processes because of the cost of

process management overhead. Thus for

,

fft1cilk

calls

fft1

which runs sequentially.

,

fft1cilk

calls

fft1

which runs sequentially.

cilk void block1( LONG n2, LONG *A, LONG b, LONG W, LONG p ) {

LONG i,s,t;

for( i=0; i<b; i++ ) {

s = A[i];

t = mulmod(W[i],A[n2+i],p);

A[ i] = addmod(s,t,p);

A[n2+i] = submod(s,t,p);

}

return;

}

#define B 65536

#define FFTCUTOFF 32768

cilk void fft1cilk( LONG *A, LONG n, LONG *W, LONG p ) {

LONG q,r,i;

if( n<=FFTCUTOFF ) { fft1(A,n,W,p); return; }

spawn fft1cilk( A, n2, W+n2, p );

spawn fft1cilk( A+n2, n2, W+n2, p );

sync; // wait for the two fft1cilk calls to finish

n2 = n/2; q = n2/B; r = n2-q*B;

for( i=0; i<q; i++ ) spawn block1( n2, A+i*B, B, W+i*B, p );

if( r>0 ) spawn block1( n2, A+q*B, r, W+q*B, p );

sync; // wait for all blocks to complete

return;

} |

To obtain good parallel speedup we also need to parallelize the for loop

in fft1 because it does  multiplications out of a total of

multiplications out of a total of  multiplications. We do this by executing large contiguous blocks of the

loop in parallel. We could use Cilk's parallel for loop construct cilk_for and let Cilk determine what block size to use but

this does not allow us to tune the block size for best performance.

Instead we choose a blocksize B and parallelize the loop

explicitly as shown in Figure 2. To get best performance,

we reduced B until the Cilk process overhead takes more

than 1% say of the total time for the FFT. This yields good parallel

speedup for many cores when

multiplications. We do this by executing large contiguous blocks of the

loop in parallel. We could use Cilk's parallel for loop construct cilk_for and let Cilk determine what block size to use but

this does not allow us to tune the block size for best performance.

Instead we choose a blocksize B and parallelize the loop

explicitly as shown in Figure 2. To get best performance,

we reduced B until the Cilk process overhead takes more

than 1% say of the total time for the FFT. This yields good parallel

speedup for many cores when  is large.

is large.

An alternative way to parallelize an FFT is to use a recursive FFT for

creating parallel tasks for large  as we have but

use a non-recursive FFT for smaller

as we have but

use a non-recursive FFT for smaller  .

These and other strategies for parallelizing the FFT for multi-core

computers are discussed in [9, 13].

.

These and other strategies for parallelizing the FFT for multi-core

computers are discussed in [9, 13].

Steps 1, 2, 3, 5, and 6 of Algorithm 6 require  time. Steps 3 and 6 involve

time. Steps 3 and 6 involve  inverses in

inverses in  which, because they require many

divisions, are relatively very expensive. All the inverses can be

replaced with multiplications as follows. After computing

which, because they require many

divisions, are relatively very expensive. All the inverses can be

replaced with multiplications as follows. After computing  ! in step 1, we compute the inverse

! in step 1, we compute the inverse  . For steps 3 and 6, we run the

loop backwards and multiply by

. For steps 3 and 6, we run the

loop backwards and multiply by  to get the next

inverse as follows.

to get the next

inverse as follows.

for( i=d; i>1; i-- ) { Pi[i] = mulmod(Pi[i],finv,p); finv = mulmod(finv,i,p); }

We parallelized only the polynomial multiplication  in step 4. To multiply two polynomials of degree at most

in step 4. To multiply two polynomials of degree at most  our FFT multiplication needs three arrays

our FFT multiplication needs three arrays  ,

,  ,

and

,

and  of size

of size  where

where  satisfies

satisfies  .

.

The data in Table 4 shows that the best parallel speedup

for step 3 is 4.12. A factor of 4.12 on 10 cores is not very good and

due to the fact that we did not paralellize steps 1, 3 and 6 which

involve  ,

,  and

and  multiplications in

multiplications in  , respectively. We did not do this because computing

, respectively. We did not do this because computing

is not a bottleneck of Algorithm 1.

is not a bottleneck of Algorithm 1.

Of course, it is possible to paralellize steps 1, 3 and 6. For  blocks of size

blocks of size  we would need to

compute the factorials

we would need to

compute the factorials  in parallel. We could do

this by first computing

in parallel. We could do

this by first computing  partial products

partial products  in parallel and then compute

in parallel and then compute  . Now we can execute step 1 in

. Now we can execute step 1 in  blocks in parallel. Next we would compute the inverses of

blocks in parallel. Next we would compute the inverses of  so we can execute steps 3 and 6 in parallel.

so we can execute steps 3 and 6 in parallel.

Step 5 of Algorithm 1 is the main computation

in Algorithm 1. It has complexity  . We successfully parallelized the FFTs and all

. We successfully parallelized the FFTs and all  loops in blocks except the following data movement:

loops in blocks except the following data movement:

for( i=0; i<n/2; i++ ) A[i] = A[2*i]; // compress for( i=0; i<n/2; i++ ) A[n/2+i] = A[i]; // duplicate

We tried parallelizing the above code by moving the even entries of  into a temporary array

into a temporary array  ,

in blocks, in parallel, then copying them back to

,

in blocks, in parallel, then copying them back to  , twice, in blocks, in parallel. On 6 cores, the

parallel code is slower than the serial code so we have left this pure

data movement not parallelized.

, twice, in blocks, in parallel. On 6 cores, the

parallel code is slower than the serial code so we have left this pure

data movement not parallelized.

Each of the three evaluations  ,

,

and

and  for

for  in step 6 of Algorithm 1 can be done using the

Bluestein transform [4] in

in step 6 of Algorithm 1 can be done using the

Bluestein transform [4] in  time.

Although step 6 does not dominate the time complexity it

needs the most space. We need at least

time.

Although step 6 does not dominate the time complexity it

needs the most space. We need at least  units of

storage to store the values of

units of

storage to store the values of  ,

,

and

and  .

For

.

For  and

and  ,

the requirement

,

the requirement  implies

implies  . This is

. This is  gigabytes. We

chose to save half of this by choosing

gigabytes. We

chose to save half of this by choosing  instead.

This increases the time a little (see Table 3) because we

obtain a smaller portion of the roots at each recursive call of

Algorithm 1.

instead.

This increases the time a little (see Table 3) because we

obtain a smaller portion of the roots at each recursive call of

Algorithm 1.

The Bluestein transform does one multiplication of two polynomials of

degree  plus

plus  work. For a

multiplication of degree

work. For a

multiplication of degree  we need an FFT of size

we need an FFT of size

and our FFT uses

and our FFT uses  units

of temporary storage. For

units

of temporary storage. For  ,

,

and

and  we have

we have  so we need 192 gigabytes. We do not evaluate of

so we need 192 gigabytes. We do not evaluate of  ,

,  and

and  in parallel as that would triple the 192 gigabytes to

576 gigabytes.

in parallel as that would triple the 192 gigabytes to

576 gigabytes.

For  with

with  small, because

small, because

for some

for some  ,

we can save time and space by applying (6). We do

,

we can save time and space by applying (6). We do  DFTs of size

DFTs of size  ,

in blocks, in parallel, then

,

in blocks, in parallel, then  multiplications by

powers of

multiplications by

powers of  , in blocks in

parallel, followed by

, in blocks in

parallel, followed by  FFTs of size

FFTs of size  which we do in parallel. This reduces the time for an

evaluation by a factor between 6 and 12 since we replace 3 FFTs of size

which we do in parallel. This reduces the time for an

evaluation by a factor between 6 and 12 since we replace 3 FFTs of size

where

where  by

by  FFTs of size

FFTs of size  which is equivalent

to one FFT of size

which is equivalent

to one FFT of size  .

.

We need an additional  units of storage for

storing the

units of storage for

storing the  inputs of size

inputs of size  for the

for the  FFTs and an additional

FFTs and an additional  units for the required

units for the required  array. Thus applying (6) reduces the temporary storage needed from

array. Thus applying (6) reduces the temporary storage needed from  units to

units to  units. For

units. For  and

and

, with

, with  , this is

, this is  gigabytes of

temporary storage instead of 192 gigabytes.

gigabytes of

temporary storage instead of 192 gigabytes.

We can now state the total storage needed by our implementation of

Algorithm 1. We need to store the input  of degree

of degree  and an array of size

and an array of size  for the output roots

for the output roots  . We

need space for three temporary polynomials of degree

. We

need space for three temporary polynomials of degree  . Including the memory for step 6,

our implementation uses

. Including the memory for step 6,

our implementation uses  units, which, for

units, which, for  ,

,  and

and  is 121 gigabytes.

is 121 gigabytes.

In step 9 we need to expand the polynomial  . Let

. Let  and suppose

and suppose  is stored in an array of size

is stored in an array of size  and

and  in an array of size

in an array of size  . We can compute

. We can compute  in

in  using fast multiplication and divide and conquer. The

obvious way to do this is to split the roots in

using fast multiplication and divide and conquer. The

obvious way to do this is to split the roots in  into two equal size groups, of size

into two equal size groups, of size  and

and  , then recursively compute

, then recursively compute

and finally multiply  by

by  using fast multiplication for large

using fast multiplication for large  .

In the literature this approach is called the product tree

multiplication algorithm; see [17, Algorithm 10.3]. In

Appendix A, the function mult implements the product

tree algorithm in Magma. We use it to create the input polynomial

.

In the literature this approach is called the product tree

multiplication algorithm; see [17, Algorithm 10.3]. In

Appendix A, the function mult implements the product

tree algorithm in Magma. We use it to create the input polynomial  to get Magma timings. However, mult

is inefficient because all recursive calls allocate new memory for the

inputs and products in the product tree.

to get Magma timings. However, mult

is inefficient because all recursive calls allocate new memory for the

inputs and products in the product tree.

For large products we use our FFT based polynomial multiplication FFTpolmul64s which needs a temporary array  of size

of size  units of storage where

units of storage where  . We do not want to allocate space for

. We do not want to allocate space for  in each recursive call. Fortunately,

in each recursive call. Fortunately,  is a power of 2 so we can divide

is a power of 2 so we can divide  into two halves

and execute the two recursive calls in parallel using the two halves of

into two halves

and execute the two recursive calls in parallel using the two halves of

. But the size of

. But the size of  plus

plus  is

is  . They don't fit in

. They don't fit in  .

The remedy is to note that

.

The remedy is to note that  and

and  are both monic, so we do not need to store their leading coefficients.

are both monic, so we do not need to store their leading coefficients.

Figure 3 presents C code for an in-place product tree

algorithm which uses the input space  of size

of size

, the output space

, the output space  of size

of size  and a temporary array

and a temporary array  of size

of size  for all polynomial

multiplications in the product tree. Our solution splits

for all polynomial

multiplications in the product tree. Our solution splits  asymmetrically into a “large” set of size

asymmetrically into a “large” set of size  where

where  and a

“small” set of size

and a

“small” set of size  .

For example, for

.

For example, for  we use

we use  and

and  . To multiply

. To multiply  we first recursively compute

we first recursively compute  in

in

and

and  in

in  . We copy the contents of

. We copy the contents of  to the input array

to the input array  and we compute the product

and we compute the product

in

in  .

For small

.

For small  , we use polmul64s

an in-place multiplication with quadratic complexity. Then we add

, we use polmul64s

an in-place multiplication with quadratic complexity. Then we add  and

and  to

to  so that

so that  contains

contains  .

Thus we have the following result.

.

Thus we have the following result.

of size

of size  and

and  be an array of size

be an array of size  and

and  an array of size

an array of size  where

where  and

and  .

Assuming

.

Assuming  , Algorithm treemul computes

, Algorithm treemul computes  in

in  arithmetic operations in

arithmetic operations in  using

only the memory of

using

only the memory of  and

and  .

.

In Figure

3

, because the memory for

,

,

and

and

in the two recursive calls is separated, we may execute the two

recursive calls to

treemul

in parallel. This also holds recursively, so we can keep executing

recursive calls in parallel, which is ideal for Cilk. In our parallel

implementation of

treemul

we parallelize the two recursive calls if

in the two recursive calls is separated, we may execute the two

recursive calls to

treemul

in parallel. This also holds recursively, so we can keep executing

recursive calls in parallel, which is ideal for Cilk. In our parallel

implementation of

treemul

we parallelize the two recursive calls if

.

We also parallelize

FFTmul64s

and the FFTs (both the subalgorithms and their calls inside

treemul

). We do not parallelize the two polynomial additions.

.

We also parallelize

FFTmul64s

and the FFTs (both the subalgorithms and their calls inside

treemul

). We do not parallelize the two polynomial additions.

#include <stdlib.h>

#define LONG long long int

// Input polynomials a and b mod p of degree da and db respectively.

// Output array c is space for the sum a + b mod p (product a b mod p)

LONG poladd64s( LONG *a, LONG *b, LONG *c, LONG da, LONG db, LONG p );

LONG polmul64s( LONG *a, LONG *b, LONG *c, LONG da, LONG db, LONG p );

LONG FFTmul64s( LONG *a, LONG *b, LONG *c, LONG da, LONG db, LONG *T, LONG p );

void treemul( LONG *S, LONG n, LONG *Q, LONG *T, LONG p ) {

LONG i,m,d;

if( n==1 ) { Q[0] = submodp(0,S[0],p); return; }

for( m=1; 2*m<n; m=2*m );

d = n-m;

treemul(S,m,Q,T,p); // compute a(x)-x^m in Q[0..m-1] using S[0..m-1] and T[0..3m-1]

treemul(S+m,d,Q+m,T+3*m,p); // and b(x)-x^d in Q[m..n-1] using S[m..n-1] and T[3m..]

for( i=0; i<n; i++ ) S[i] = Q[i]; // S = [a0,a1,…,am-1,b0,b1,…,bd-1]

if( d<10 ) polmul64s(S,S+m,Q,m-1,d-1,p); // in-place classical multiplication

else FFTmul64s(S,S+m,Q,m-1,d-1,T,p); // FFT multiplication using T[0..6m-1]

Q[n-1] = 0;

poladd64s(Q+d,S,Q+d,m-1,m-1,p); // add x^m (b-x^d) to Q[d..n-1]

poladd64s(Q+m,S+m,Q+m,d-1,d-1,p); // add x^d (a-x^m) to Q[m..n-1]

// Q[n] = 1; is okay for this sequential code but not for a parallel code

return;

}

LONG *array64s(LONG n); // return an array of size n

void treeproduct( LONG *S, LONG n, LONG *Q, LONG p ) {

// S is an array of size n and Q of size n+1

LONG N,*T;

for( N=1; N<n; N=2*N );

T = array64s(3*N);

treemul(S,n,Q,T,p);

free(T);

Q[n] = 1;

return;

} |

Figure 3. C code for computing

|

Remark  asymmetrically so that the FFTs are fully

utilized, we gain upto a factor of 2 in speed when

asymmetrically so that the FFTs are fully

utilized, we gain upto a factor of 2 in speed when  is much smaller then

is much smaller then  . Thus

we get the benefit of using a truncated FFT [22] for the

polynomial multiplications without using it.

. Thus

we get the benefit of using a truncated FFT [22] for the

polynomial multiplications without using it.

Remark

We want to compare our new tangent Graeffe implementation with an

implementation of the Cantor–Zassenhaus algorithm [8]

which uses fast polynomial arithmetic. One of the best implementations

that we know of is Magma's Factorize command. Magma uses an

implementation of Shoup's work [44] by Allan Steel [45]. For  of degree

of degree  with

with  distinct roots in

distinct roots in  , Magma uses the Cantor–Zassenhaus algorithm

which has time complexity

, Magma uses the Cantor–Zassenhaus algorithm

which has time complexity  .

.

The timings in Tables 1 and 2 were obtained on

an 8 core Intel Xeon E5-2660 CPU which runs at at 2.2 GHz base and 3.0

GHz turbo. The input polynomials  in Tables 1 and 2 of degree

in Tables 1 and 2 of degree  were

created with

were

created with  random distinct roots in

random distinct roots in  . Table 1 is for the

28.8 bit prime

. Table 1 is for the

28.8 bit prime  and Table 2 is for

the 62.4 bit prime

and Table 2 is for

the 62.4 bit prime  .

.

The timings in column NewTG are for our C implementation of Algorithm 1 with the parameter  chosen in

chosen in  . The timings in column Magma are

for the Factorize command for Magma version V2.25-5. Magma code

for creating

. The timings in column Magma are

for the Factorize command for Magma version V2.25-5. Magma code

for creating  and factoring it is given in

Appendix A. We note that older versions of Magma have a

quadratic sub-algorithm; this problem was fixed by Steel for version

V2.25-5. We also note that Magma has faster arithmetic for primes

and factoring it is given in

Appendix A. We note that older versions of Magma have a

quadratic sub-algorithm; this problem was fixed by Steel for version

V2.25-5. We also note that Magma has faster arithmetic for primes  .

.

The columns in Tables

1

and

2

labeled Step5, Step6, Step9, and

report the time spent in steps

5

,

6

,

9

, and

10

, respectively, in the top level call of Algorithm

1

. They show that initially step

5

, which costs

report the time spent in steps

5

,

6

,

9

, and

10

, respectively, in the top level call of Algorithm

1

. They show that initially step

5

, which costs

,

dominates the cost of steps

6

and

9

which cost

,

dominates the cost of steps

6

and

9

which cost

and

and

respectively. As

respectively. As

increases, so does

increases, so does

,

and the number of iterations

,

and the number of iterations

of step

5

in Algorithm

1

drops; ultimately, the computation of the product

of step

5

in Algorithm

1

drops; ultimately, the computation of the product

in step

9

dominates.

in step

9

dominates.

|

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

|||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

The column labeled %roots reports the percentage of the roots found in

the first tangent Graeffe application. Column  is

the value of

is

the value of  in step 5. Step 5 does

in step 5. Step 5 does  FFTs of size

FFTs of size  . Column FFT reports the time for one of those

FFTs. For example, in Table 2, for

. Column FFT reports the time for one of those

FFTs. For example, in Table 2, for  , 13.9 seconds was spent in step 5. The

code did

, 13.9 seconds was spent in step 5. The

code did  FFTs of size

FFTs of size  which took

which took  seconds.

seconds.

The timings in Table 1 show that the tangent Graeffe method

is 100 times faster than Magma's implementation of

Cantor–Zassenhaus at degree  .

For the larger prime in Table 2 the tangent Graeffe method

is 166 times faster at degree

.

For the larger prime in Table 2 the tangent Graeffe method

is 166 times faster at degree  .

.

Table 3 shows the effect of different choices for the

parameter  in Algorithm 1. If the

roots of

in Algorithm 1. If the

roots of  under the Graeffe transform

under the Graeffe transform  are uniformly distributed among the

are uniformly distributed among the  -th roots of unity, then the proportion of them that

remain distinct is

-th roots of unity, then the proportion of them that

remain distinct is  . Thus

doubling

. Thus

doubling  increases the proportion of roots found

at each recursive level of Algorithm 1, which saves time,

but it also doubles the cost of the evaluations in step 6.

Recall that the theoretically optimal performance is obtained by taking

increases the proportion of roots found

at each recursive level of Algorithm 1, which saves time,

but it also doubles the cost of the evaluations in step 6.

Recall that the theoretically optimal performance is obtained by taking

, where we note that

, where we note that  and

and  for

for  .

.

Column

gives the expected proportion of roots that will be found. Column

%roots is the actual percentage of roots found by Algorithm

1

. Columns Step5, Step6, and Total are the time in step

5

, step

6

, and the total time. The data in Table

3

suggests choosing

gives the expected proportion of roots that will be found. Column

%roots is the actual percentage of roots found by Algorithm

1

. Columns Step5, Step6, and Total are the time in step

5

, step

6

, and the total time. The data in Table

3

suggests choosing

to minimize time. For

to minimize time. For

the algorithm is expected to obtain between

the algorithm is expected to obtain between

and

and

of the roots. Our actual choice of

of the roots. Our actual choice of

increases the time by about 10% but saves a factor of 2 in space.

increases the time by about 10% but saves a factor of 2 in space.

|

|||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

Table

4

shows the results for our parallel implementation including timings for

of degree

of degree

.

The timings in Table

4

were obtained on a server with a 10 core Intel E5 2680 v2 CPU with 128

gigabytes of RAM running at 3.0GHz base, 3.6GHz turbo. The input

polynomial

.

The timings in Table

4

were obtained on a server with a 10 core Intel E5 2680 v2 CPU with 128

gigabytes of RAM running at 3.0GHz base, 3.6GHz turbo. The input

polynomial

is created as before by choosing

is created as before by choosing

random distinct values from

random distinct values from

with

with

.

.

|

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

Timings in column  are for computing the input

polynomial

are for computing the input

polynomial  using our in-place parallel product

tree multiplication from section 3.4. The total time for computing the

roots of

using our in-place parallel product

tree multiplication from section 3.4. The total time for computing the

roots of  is in column Total. Roughly speaking,

our software takes 10 times longer to compute the roots of

is in column Total. Roughly speaking,

our software takes 10 times longer to compute the roots of  (less for larger

(less for larger  )

than it does to create

)

than it does to create  !

!

Timings in columns Step4, Step5, Step6, Step9, and  are the times for those steps for the top-level call of Algorithm 1. Speedups are given for each computation. The reader can see

that the parallel speedup is not as good for Step4 and the division

are the times for those steps for the top-level call of Algorithm 1. Speedups are given for each computation. The reader can see

that the parallel speedup is not as good for Step4 and the division

. This is because we have

only parallelized the FFTs in them; none of steps of linear cost are

parallelized.

. This is because we have

only parallelized the FFTs in them; none of steps of linear cost are

parallelized.

The motivation for our work is the problem of sparse polynomial

interpolation where one seeks to recover the coefficients and monomials

of a polynomial  with

with  terms from values of

terms from values of  . The

popular approach by Prony and Ben-Or–Tiwari needs only

. The

popular approach by Prony and Ben-Or–Tiwari needs only  values of

values of  but it needs to factor a

polynomial

but it needs to factor a

polynomial  of degree

of degree  which has

which has  distinct roots in

distinct roots in  . Using the Cantor–Zassenhaus (CZ)

algorithm, computing the roots of

. Using the Cantor–Zassenhaus (CZ)

algorithm, computing the roots of  takes

takes  time. Because this is the most expensive step in

Ben-Or/Tiwari sparse interpolation, and because CZ does gcd computations

which are difficult to parallelize, research in sparse interpolation has

tried different approaches that do not require root finding.

time. Because this is the most expensive step in

Ben-Or/Tiwari sparse interpolation, and because CZ does gcd computations

which are difficult to parallelize, research in sparse interpolation has

tried different approaches that do not require root finding.

If we choose the prime  of the form

of the form  with

with  small, the new tangent

Graeffe (TG) algorithm factors

small, the new tangent

Graeffe (TG) algorithm factors  in

in  time. This is

time. This is  faster than CZ, but

the constants actually matter in practice. In this work we improved the

main step of TG by a factor of 2 and we showed that for large

faster than CZ, but

the constants actually matter in practice. In this work we improved the

main step of TG by a factor of 2 and we showed that for large  , TG is faster than CZ by a factor

of

, TG is faster than CZ by a factor

of  . Our new C implementation

of TG is over 100 times faster than Magma's C implementation of CZ for

. Our new C implementation

of TG is over 100 times faster than Magma's C implementation of CZ for

on the tests we made. So TG really is a lot

faster than CZ.

on the tests we made. So TG really is a lot

faster than CZ.

Another goal was to see if we could parallelize TG for multi-core

computers. We found that it was not sufficient to only parallelize the

underlying FFT. We also had to parallelize many sub-algorithms to get

good parallel speedup. Here we contributed a new parallel in-place

product tree algorithm. We were also successful in reducing the space

needed so that we could compute the roots of  of

degree one billion on a 10 core computer with 128 gigabytes of RAM in

about one hour. It should be possible to reduce the time further by

using vectorization [12, 26]. The sequential

implementation of the FFTs could also be further improved using

techniques from [15, 42] and Harvey's approach

for number theoretic FFTs [21].

of

degree one billion on a 10 core computer with 128 gigabytes of RAM in

about one hour. It should be possible to reduce the time further by

using vectorization [12, 26]. The sequential

implementation of the FFTs could also be further improved using

techniques from [15, 42] and Harvey's approach

for number theoretic FFTs [21].

p := 3*29*2^56+1;

p := 7*2^26+1;

Fp := FiniteField(p);

R<x> := PolynomialRing(Fp);

mult := function( L )

n := #L;

if n eq 1 then return x-L[1];

else

m := n div 2;

f := $$( L[1..m] ) * $$( L[m+1..n] );

return f;

end if;

end function;

d := 2^12-1;

S := { Random(Fp) : i in [1..d] };